Conducted by Fordham’s Senior Research Fellow, Dr. Stéphane Lavertu, this research brief provides an updated analysis of brick-and-mortar charter school performance in the years after the pandemic (2021–22 and 2022–23). He finds that, while their advantage has slightly diminished, charters continue to outperform districts in the post-pandemic years.

For more details, download the full report (which includes technical appendixes), or read it below.

Foreword

By Aaron Churchill

Over the past two decades, researchers have spent countless hours studying the impacts of public charter schools—independently-run, tuition-free schools of choice that serve some 3.7 million U.S. students today. Just prior to the pandemic, studies from Ohio and nationally indicated that charters on average delivered superior academic outcomes compared to traditional districts. And the very finest charters in Ohio and around the nation were driving learning gains that gave disadvantaged students the edge needed to succeed in college and career.

The pandemic scrambled most everything about K–12 education. But did it upend what we know about charter school performance? The present study, conducted by Fordham’s Senior Research Fellow Dr. Stéphane Lavertu, examines the post-pandemic performance of Ohio’s brick-and-mortar charter schools, which enrolled 81,000 students—mostly from urban communities—during the 2022–23 school year.

Dr. Lavertu’s analysis of state value-added data indicates that the charter school advantage has persisted in the wake of the pandemic. In 2022–23, the average brick-and-mortar charter school delivered the annual equivalent of roughly 13 extra days of learning in English language arts and 9 extra days in math. While the size of the ELA advantage (though not math) has diminished since the pandemic, the average Ohio charter school still outperforms nearby district schools.

There, of course, remains tremendous work ahead to help all students—district and charter alike—to achieve at high levels after pandemic-related disruptions. But one thing’s for sure: Supporting—and investing in—high-quality public charter schools remains a strong, evidence-based approach that Ohio should continue to embrace.

Aaron Churchill

Ohio Research Director

Thomas B. Fordham Institute

Introduction

Ohio’s brick-and-mortar charter schools were going strong prior to the pandemic. Rigorous studies demonstrated that student test scores in English language arts (ELA) and mathematics improved significantly when students attended charter schools instead of nearby district schools. Disciplinary incidents and absenteeism also declined significantly. Such results were not mere correlations. These studies used research designs that plausibly unearthed charter schools’ causal impacts on student outcomes. Thus, just prior to the pandemic in the spring of 2020, there was little doubt that Ohio’s brick-and-mortar charter schools were, on average, high-quality alternatives to district schools, particularly for lower-income families residing in our state’s large urban districts.

The pandemic hit the typical school hard, but it hit charters even harder. Charter operators noted that tight labor markets and substantially lower funding (about $5,000 less per pupil than nearby district schools at the time) made it difficult to recruit and retain teachers during these turbulent years. Additionally, the economically disadvantaged students charters primarily serve were disproportionately derailed by the pandemic, in part because of school closures and transportation issues. Research shows that urban district and charter schools serving lower-income students experienced much steeper declines in test scores than the average Ohio public school.

With the pandemic in the rearview mirror, do Ohio’s brick-and-mortar charter schools remain a quality educational option? To answer that question, we need to determine whether the average Ohio charter school student is still learning more during the school year than they would in a nearby district school. Fortunately, Ohio’s publicly available school “growth” measures can help us make such a determination.[1]

This research brief examines how the average achievement growth of Ohio’s charter students compares to that of the average district student. The results reveal that, in terms of student achievement growth, Ohio’s charter schools remain a better educational option for the average charter student. During the 2022–23 school year, the average charter student experienced achievement gains in ELA and math that were approximately 0.02 of a standard deviation greater than those of students in nearby district schools. That is comparable to the average achievement impact of 0.03 standard deviations associated with increasing school budgets by $1,000 per pupil for four years; but, in the case of Ohio’s brick-and-mortar charters, those gains are realized every one or two years and at no additional cost. Indeed, during the years of this study, the achievement gains came with a significantly lower cost compared to district schools.[2] The estimated charter advantage is roughly equivalent to charter students participating in an additional 13 days of learning in ELA and an additional 9 days of learning in math every school year.

It is important to keep in mind that charter school performance is not what it was in 2019, and the average charter school student experienced significant learning loss during the pandemic. However, the results of this analysis imply that the pandemic-induced achievement gap between higher- and lower-income students would have been even worse without charter schools.

Estimating the impact of Ohio’s charter schools on student achievement

To estimate the causal impact of charter schools, we need to compare their pupils’ learning to the learning of identical students who did not attend charter schools. In other words, we need to make sure that the only relevant difference between charter and district students is the school they attended. It seems like an impossible task, as students who attend charter schools can be quite different from students who remain in district schools. Statewide, charter students are disproportionately economically disadvantaged, and a basic comparison of their test scores to the statewide average doesn’t tell us much about the actual effectiveness of their schools. Comparing students attending charter and non-charter schools in the same district (as opposed to statewide) helps in making apples-to-apples comparisons of their outcomes, as these students are more alike. But significant problems remain. For example, parents who are aware of charter schools and navigate the process of enrolling their children are likely quite different than those who do not. They likely confer knowledge, skills, and other behavioral attributes to their children that lead to higher student achievement, which one might falsely attribute to charter schools when conducting a simple comparison of charter and non-charter students within a district.

Fortunately, research has shown that focusing on achievement gains—what some refer to as student “value added” or “academic growth”—can yield estimates of school effectiveness that are minimally biased.[3] Value-added estimates hold constant a student’s achievement level as of a prior school year, effectively controlling for all differences between students that contributed to different educational outcomes up to that point in their lives. For example, an annual value-added estimate for grade 4 holds constant students’ grade 3 achievement level, which captures what happened in their life that led to their grade 3 achievement, from how well-nourished they were while in the womb, to how much stress they experienced growing up, to the quality of the educational experiences they had through third grade. Research indicates that so long as one focuses on students residing in a similar geographic area, comparing value-added achievement gains between charter and district students should get us close to the impact estimates we would get from an experiment that randomly assigned students to charter and traditional public schools (the “gold standard” for estimating causal impacts).[4]

Ohio’s school-level “growth” measure captures the average test-score gains of schools’ students relative to the average Ohio student. Consequently, one can compare the effectiveness of charter and traditional public schools operating in the same district by comparing these school-level estimates, which are publicly available on the Ohio Department of Education and Workforce (ODEW) website. The primary drawback is that these estimates are scaled differently than those in the academic literature, which makes it difficult to benchmark effect sizes. The appendix describes how I adjusted “gain” estimates (from 2018–19) and “growth” estimates (from 2021–22 and 2022–23) to get the proper scale and render pre- and post-pandemic estimates comparable.[5] It also describes how I used these rescaled estimates to create ELA, math, and science “composite” value-added scores that are comparable to those on Ohio’s school report cards.

The analysis below compares student achievement gains between brick-and-mortar charter and traditional public schools operating in the same district.[6] Note that these estimates are weighted by the number of tested students to account for measurement error. That enables us to speak in terms of the average charter student (as opposed to the average charter school) and approximates the estimates one would get using a student-level dataset. The appendix provides a full accounting of the methods and results that underlie the figures.
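The weighting idea described above can be illustrated with a minimal sketch. It is not the author's model (the appendix documents the actual methods); it only shows how weighting school-level value-added by tested students turns a per-school average into a per-student average, and how the comparison stays within a district. The school records, district names, and function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical school-level records: (district, sector, value_added, tested_students).
# These numbers are invented for illustration and do not come from the report.
SCHOOLS = [
    ("District A", "charter", 0.05, 200),
    ("District A", "charter", -0.01, 100),
    ("District A", "district", 0.01, 400),
    ("District A", "district", 0.00, 600),
    ("District B", "charter", 0.02, 300),
    ("District B", "district", -0.02, 500),
]


def weighted_mean(pairs):
    """Mean of values weighted by tested-student counts."""
    total_weight = sum(weight for _, weight in pairs)
    return sum(value * weight for value, weight in pairs) / total_weight


def charter_gap_by_district(records):
    """Student-weighted charter-minus-district gap within each district."""
    grouped = defaultdict(lambda: {"charter": [], "district": []})
    for district, sector, value_added, n_tested in records:
        grouped[district][sector].append((value_added, n_tested))
    gaps = {}
    for district, sectors in grouped.items():
        # Only districts with both sectors allow a within-district comparison.
        if sectors["charter"] and sectors["district"]:
            gaps[district] = (
                weighted_mean(sectors["charter"]) - weighted_mean(sectors["district"])
            )
    return gaps


def overall_gap(records):
    """Average the within-district gaps, weighting by charter tested students."""
    gaps = charter_gap_by_district(records)
    charter_n = defaultdict(int)
    for district, sector, _, n_tested in records:
        if sector == "charter":
            charter_n[district] += n_tested
    return weighted_mean([(gaps[d], charter_n[d]) for d in gaps])


if __name__ == "__main__":
    print(charter_gap_by_district(SCHOOLS))
    print(overall_gap(SCHOOLS))
```

Weighting the final average by charter enrollment is one simple way to express the result in terms of the average charter student rather than the average charter school.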

Charter performance during the 2021–22 and 2022–23 school years

Takeaway 1: Brick-and-mortar charter schools continue to yield greater achievement gains than nearby district schools, but their advantage in English language arts is smaller than in 2018–19.

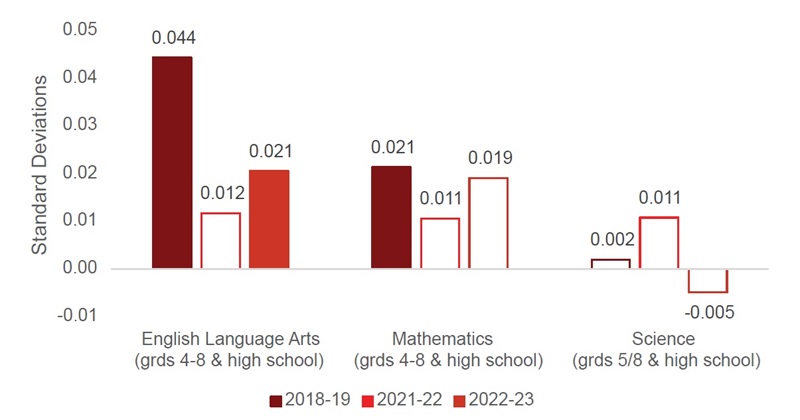

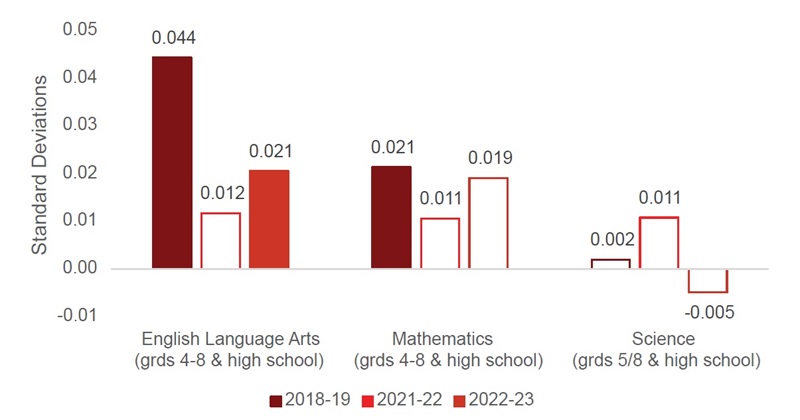

Figure 1 (below) reports the estimated difference in achievement growth between students in charter schools and students attending traditional public schools in the same district. Specifically, it presents “composite” estimates for English language arts (combining results from Ohio state tests in grades 4–8 and the high school ELA 1 and ELA 2 exams), mathematics (combining state tests in grades 4–8 and high school Algebra I, geometry, Integrated Mathematics I, and Integrated Mathematics II), and science (combining state tests in grades 5 and 8 and the high school biology exam).[7] The figure disaggregates estimated effects by subject so that pre- and post-pandemic estimates are comparable. This disaggregation compromises statistical precision and, thus, yields some estimates that do not quite reach conventional levels of significance. But the comparisons are nonetheless informative.

The figure indicates that, compared to their counterparts in district schools, students attending charter schools in 2022–23 had test score gains that were 0.021 of a standard deviation greater on ELA exams. Thus, after a notable dip in 2021–22, a charter advantage reemerged in 2022–23. However, that advantage is less than half the size it was in 2018–19, a statistically significant drop. The results also suggest that after an initial dip in 2021–22, charter schools regained their advantage in math of approximately 0.02 standard deviations in 2022–23.[8] The math estimate narrowly fails to attain conventional levels of statistical significance (hence the empty bar), but the results nonetheless suggest that the charter advantage in math is back to pre-pandemic levels.[9] Finally, charter schools performed comparably to nearby district schools on science exams, both before and after the pandemic.
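Endnote 9 explains that the math estimate is significant under a one-tailed test but not under the two-tailed test used in this report. The sketch below shows how that can happen, assuming a normal approximation for the test statistic. The z-value of 1.80 is hypothetical, chosen only because it falls between the usual one-tailed and two-tailed cutoffs; the report's actual test statistics appear in its appendix, not here.

```python
import math


def normal_p_values(z):
    """Return (one_tailed, two_tailed) p-values for a z-statistic.

    Assumes a standard normal reference distribution. The one-tailed
    value tests for a positive effect, i.e. P(Z >= z).
    """
    one_tailed = 0.5 * math.erfc(z / math.sqrt(2))
    two_tailed = math.erfc(abs(z) / math.sqrt(2))
    return one_tailed, two_tailed


# Hypothetical test statistic, not taken from the report.
HYPOTHETICAL_Z = 1.80
one_tailed_p, two_tailed_p = normal_p_values(HYPOTHETICAL_Z)

if __name__ == "__main__":
    print(f"one-tailed p = {one_tailed_p:.3f}, two-tailed p = {two_tailed_p:.3f}")
```

For a positive estimate, the two-tailed p-value is twice the one-tailed value, which is why an estimate can clear the 0.05 threshold under one test and miss it under the other.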

To validate these results, I re-estimated the models using the composite value-added measures publicly available on Ohio’s school report cards (labeled “effect sizes” on the report cards). These additional analyses confirm the value-added estimates in Figure 1 and reveal that pooling all tested subjects across both post-pandemic years (2021–22 and 2022–23) yields a statistically significant charter school advantage of approximately 0.017 standard deviations.[10]

Figure 1. Differences in achievement gains between charter and district students

Note. The figure presents the estimated difference in achievement gains between charter school students and students attending traditional public schools in the same district. Positive (negative) values indicate that the average charter school student experienced larger (smaller) annual achievement gains than traditional public school students. Solid bars indicate that estimated differences are statistically significant at conventional levels (p<0.05).

Translating these estimated charter school effects into the more intuitive “days’ worth of learning” metric is not straightforward, in part because of the inclusion of high school exams that are not administered in consecutive years. Nevertheless, with this caveat in mind, the estimates for 2022–23 are the equivalent of charter students participating in an additional 13 days of learning in ELA (as compared to 28 days as of 2018–19) and 9 additional days of learning in math (as compared to 10 days as of 2018–19).[11] These are rough approximations, but they provide some intuition about the magnitude of the post-pandemic charter school achievement advantage in ELA and math.
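The conversion described in endnote 11 can be sketched as follows: divide the effect size by the typical annual growth rate from Hill et al. and multiply by 180 instructional days. The function and constant names are illustrative. The 2018–19 ELA input of 0.044 is an inference (the 2022–23 estimate of 0.021 plus the 0.023 decline reported in endnote 8), not a figure quoted directly in the text. Math is omitted because the text gives only the rounded value of approximately 0.02.

```python
# Typical annual achievement gains (standard deviations), per endnote 11.
TYPICAL_ANNUAL_GROWTH = {"ela": 0.286, "math": 0.369}
INSTRUCTIONAL_DAYS = 180  # typical school year length, per endnote 11


def days_of_learning(effect_sd, subject):
    """Convert an effect size in standard deviations to days of learning."""
    fraction_of_year = effect_sd / TYPICAL_ANNUAL_GROWTH[subject]
    return fraction_of_year * INSTRUCTIONAL_DAYS


# 2022-23 ELA estimate from Figure 1.
ela_2023_days = days_of_learning(0.021, "ela")

# 2018-19 ELA estimate inferred as 0.021 + 0.023 (an assumption; see lead-in).
ela_2019_days = days_of_learning(0.021 + 0.023, "ela")

if __name__ == "__main__":
    print(round(ela_2023_days), round(ela_2019_days))
```

Rounded to whole days, these inputs reproduce the 13 and 28 days cited above for ELA.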

Takeaway 2: Students in brick-and-mortar charter schools experienced large gains on high school exams (relative to students in nearby district schools), which helped sustain the charter advantage since the pandemic.

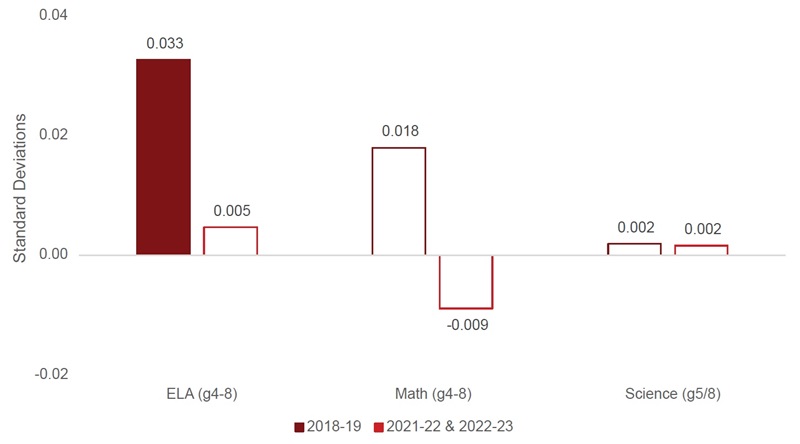

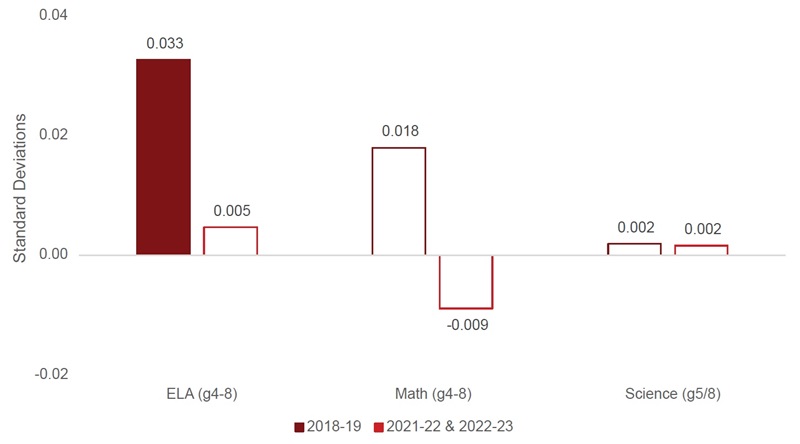

The analysis below disaggregates the estimates in Figure 1 by grade band and subject. Figure 2 (below) focuses on student achievement in grades 4–8 and, to maximize statistical power, pools the value-added estimates from the 2021–22 and 2022–23 school years.[12] These estimates capture annual learning gains. For example, during the 2018–19 school year, the average charter school student gained an extra 0.033 of a standard deviation per year in ELA compared to their peers in district schools. The results in Figure 2 indicate that charter schools’ pre-pandemic advantage for students in grades 4–8 was wiped out in 2021–22 and 2022–23. In other words, considering all post-pandemic years together, charter school students in grades 4–8 learned no more (but no less) than their counterparts in district schools.

Figure 2. Differences in achievement gains between charter and district students (grades 4–8)

Note. The figure presents the estimated difference in achievement gains between charter school students and students attending traditional public schools in the same district. Positive (negative) values indicate that the average charter school student experienced larger (smaller) annual achievement gains than traditional public school students. Solid bars indicate that estimated differences are statistically significant at conventional levels (p<0.05).

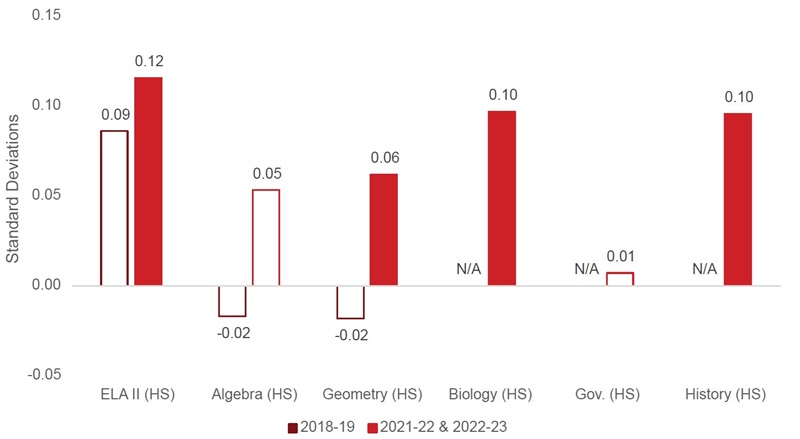

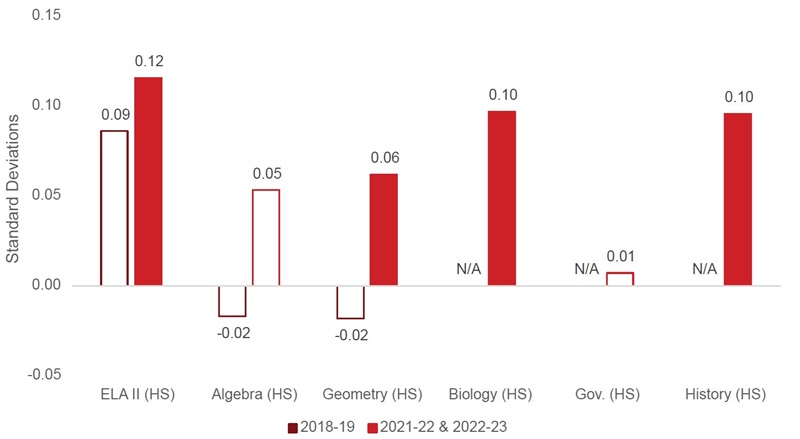

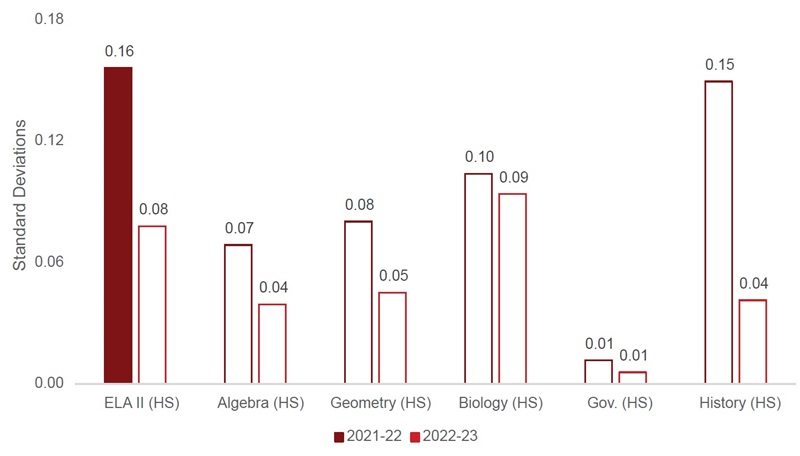

Figure 3 (below) presents the results for tests that students typically take in high school, including some for which we have no value-added estimates for the 2018–19 school year (biology, government, and history).[13] It indicates that the charter school advantage for the 2021–22 and 2022–23 school years (indicated in Figure 1) is driven predominantly by student achievement on high school exams. Interpreting the effect sizes for high school exams is less straightforward than interpreting the annual achievement gains presented in Figure 2, as the value-added estimates for high school often capture multiple years of learning as opposed to annual achievement gains.[14] The difficulties with comparing the estimates in Figure 2 and Figure 3 do not detract from the primary take-home message, however: The 2018–19 charter advantage in grades 4–8 shifted to an advantage in high school during the 2021–22 and 2022–23 school years. This shift underlies the ostensibly stable charter school impact estimates for mathematics that appear in Figure 1.

Figure 3. Differences in achievement gains between charter and district students (high school)

Note. The figure presents the estimated difference in achievement gains between charter school students and students attending traditional public schools in the same district. Positive (negative) values indicate that the average charter school student experienced larger (smaller) annual achievement gains than traditional public school students. Solid bars indicate that estimated differences are statistically significant at conventional levels (p<0.05).

It is important to reiterate that both charter and district students lost significant ground during the pandemic, and that these analyses compare achievement gains between students in charter and district schools. Thus, the large “increases” in charter school value-added on high school exams are due in part to charter students losing less ground than their counterparts in district schools, as opposed to an absolute improvement in the achievement of charter school students. Similarly, the estimates for grades 4–8 indicate that the annual achievement gains of charter students became identical to those of district students, which is a “decline” in the sense that charters lost the (substantial) advantage in achievement gains their students enjoyed as of 2018–19.

Takeaway 3: Brick-and-mortar charter schools’ achievement advantage in grades 4–8 appears to be on the rebound.

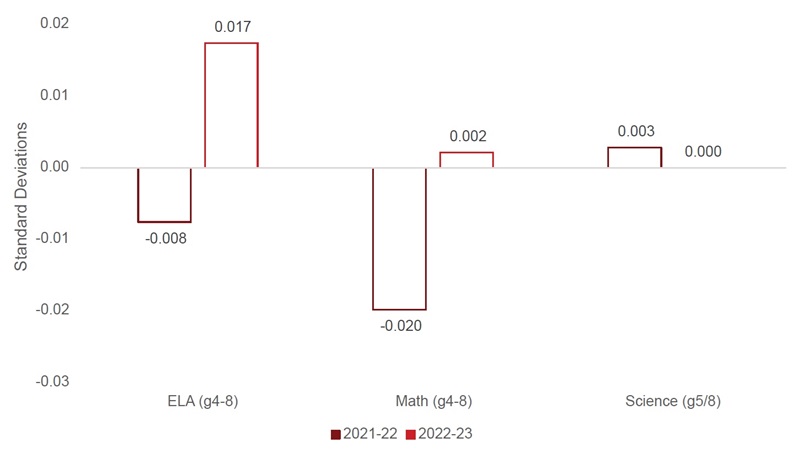

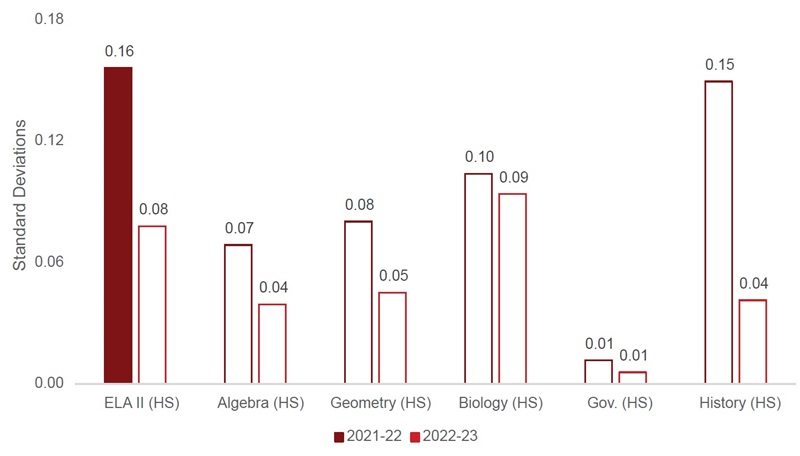

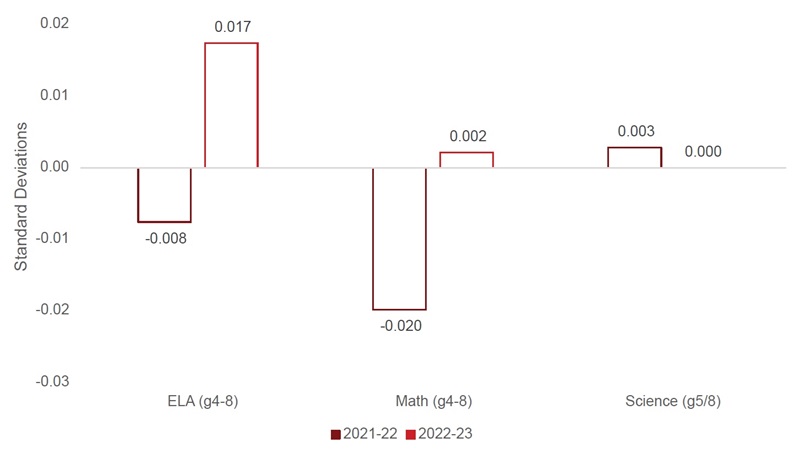

The analysis above pools value-added estimates from the 2021–22 and 2022–23 school years to enhance statistical power. But were there changes in the impact of charters between 2021–22 and 2022–23? Although Ohio’s value-added estimates are generally too imprecise to estimate impacts annually for particular grade bands and subjects, examining the differences in effects between the two post-pandemic school years suggests some interesting patterns. Figure 4 indicates that, from 2021–22 to 2022–23, charter schools’ value-added (relative to district schools) increased by 0.025 of a standard deviation on ELA exams (from -0.008 to 0.017) and 0.022 of a standard deviation on math exams (from -0.020 to 0.002) in grades 4–8. These results contrast with a relative decline from the exceptionally high 2021–22 value-added for high school grades that Figure 5 presents.[15]
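Endnote 12 notes that giving 2022–23 twice the weight of 2021–22, as Ohio's report cards do, raises estimated charter effectiveness relative to equal weighting. The rough sketch below illustrates the direction of that difference using the grades 4–8 by-year estimates quoted above. A weighted average of by-year estimates is a simplification and an assumption here; the pooled models in the appendix are not simple averages, so these numbers will not match Figure 2 exactly.

```python
# By-year charter-minus-district estimates for grades 4-8, as quoted in the text.
BY_YEAR = {
    "ela": {"2021-22": -0.008, "2022-23": 0.017},
    "math": {"2021-22": -0.020, "2022-23": 0.002},
}

# Weighting schemes: equal weights versus 2022-23 counted twice.
EQUAL = {"2021-22": 1, "2022-23": 1}
REPORT_CARD_STYLE = {"2021-22": 1, "2022-23": 2}


def pooled(estimates, weights):
    """Weighted average of by-year estimates (a stand-in for true pooling)."""
    total_weight = sum(weights[year] for year in estimates)
    return sum(estimates[year] * weights[year] for year in estimates) / total_weight


if __name__ == "__main__":
    for subject, estimates in BY_YEAR.items():
        equal = pooled(estimates, EQUAL)
        report_card = pooled(estimates, REPORT_CARD_STYLE)
        print(f"{subject}: equal={equal:.4f}, 2022-23 double-weighted={report_card:.4f}")
```

Under these assumptions, double-weighting 2022–23 moves the ELA average from about 0.0045 to about 0.0087 and the math average from about -0.009 to about -0.0053, which matches the direction endnote 12 describes.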

It is worth emphasizing that one should not make too much of the estimates in Figures 4 and 5 (below), as they are too imprecise to draw conclusions with statistical confidence (hence the empty bars). For example, the estimate of 0.017 for ELA in grades 4–8 narrowly fails to attain statistical significance; we cannot rule out a substantively significant effect.[16] A charter student with an extra 0.017 of a standard deviation in annual ELA achievement in grades 4–8 would have accumulated an achievement advantage of 0.085 of a standard deviation (5 years x 0.017) by the end of eighth grade. That equates to approximately an additional 49 days’ worth of learning after five years. So, these by-year analyses do not enable us to rule out substantively important charter school effects. Figures 4 and 5 illustrate trends, as opposed to making a conclusive statement about the true impact of charter schools in 2022–23.

Figure 4. Differences in achievement gains between charter and district students (grades 4–8)

Note. The figure presents the estimated difference in achievement gains between charter school students and students attending traditional public schools in the same district. Positive (negative) values indicate that the average charter school student experienced larger (smaller) annual achievement gains than traditional public school students. Solid bars indicate that estimated differences are statistically significant at conventional levels (p<0.05).

Figure 5. Differences in achievement gains between charter and district students (high school)

Note. The figure presents the estimated difference in achievement gains between charter school students and students attending traditional public schools in the same district. Positive (negative) values indicate that the average charter school student experienced larger (smaller) annual achievement gains than traditional public school students. Solid bars indicate that estimated differences are significant at conventional levels (p<0.05).

Summary and implications

The analysis indicates that the average student in Ohio’s brick-and-mortar charter schools continues to learn more than they would in nearby district schools. Indeed, the achievement gains that charters generate in English language arts and mathematics would likely be expensive to realize if, instead of charter schools, we sought to achieve them by increasing funding of district schools. Make no mistake: Students in charter and urban district schools experienced larger achievement declines during the pandemic than students in the average Ohio public school. Charter school students indeed fell behind during this period. However, the results of this analysis imply that the pandemic-induced achievement gap between higher- and lower-income students would have been even worse in the absence of charter schools.

Setting aside pandemic-related learning loss, the results above also serve as a reminder that there is room for improvement in Ohio’s charter sector. Ohio’s charter schools do not provide quite the same achievement advantage over district schools that they did prior to the pandemic.[17] Ohio’s charter schools also remain less effective than those in states such as Massachusetts, New York, and Rhode Island. As of 2019, charter students in those states posted annual achievement gains equal to approximately 41, 75, and 90 extra days’ worth of learning each year, respectively. As I note above, however, as of 2022–23, the charter school advantage in Ohio equals approximately 13 additional days of learning in ELA (as compared to 28 days as of 2018–19) and 9 additional days of learning in math (as compared to 10 days as of 2018–19).

It may be that what has hindered Ohio charter schools is their limited funding compared to nearby district schools, which, among other things, has made it particularly difficult to recruit and retain teachers. Ohio’s substantial funding increases for high-quality charter schools in 2023–24 reduce the funding disparities significantly for these schools. Given what we know about the causal impact of funding increases when the money is spent well, these changes may enable significant improvements in the performance of Ohio’s charter schools.

About the author and acknowledgments

Stéphane Lavertu is a Senior Research Fellow at the Thomas B. Fordham Institute and Professor in the John Glenn College of Public Affairs at The Ohio State University. Any opinions or recommendations are his and do not necessarily represent policy positions or views of the Thomas B. Fordham Institute, the John Glenn College of Public Affairs, or The Ohio State University. He wishes to thank Vlad Kogan for his thoughtful critique and suggestions, as well as Chad Aldis, Aaron Churchill, Chester E. Finn, Jr., and Mike Petrilli for their careful reading and helpful feedback on all aspects of the brief. The ultimate product is entirely his responsibility, and any limitations may very well be due to his failure to address feedback.

Endnotes

[1] The analysis focuses on “site-based” brick-and-mortar charter schools serving “general” or “special” education students. It excludes virtual schools and brick-and-mortar schools focused on dropout prevention and recovery, as there are no district schools comparable to these other types of charter schools.

[2] Ohio significantly increased charter school funding for the 2023–24 school year.

[3] This validation is based on studies that follow students who attended the same elementary schools and, upon reaching the terminal grade of that school, transitioned to charter and traditional-public middle schools. As I discuss below, such a “difference in differences” analysis (which must focus on middle schools) yields 2016–2019 results that are similar in magnitude to those presented in the analysis below.

[4] Within-district comparisons are particularly important in the wake of the pandemic, as recovery from learning loss varies significantly across districts. Indeed, as Table B5 and Table B6 in the appendix reveal, making statewide comparisons suggests that the value-added of charter schools has improved significantly since 2019.

[5] Reassuringly, the estimated effects of attending charter schools (as opposed to nearby traditional public schools) for 2018–19 are similar to those from my 2020 Fordham Institute report, which employs student-level data and, for middle schools, a rigorous “difference in differences” research design that yields plausibly causal estimates of charter school impacts. This correspondence speaks to the validity of SAS’s value-added calculations, which employ all prior years of available student test scores to generate a value-added score.

[6] The analytic sample includes “site-based” brick-and-mortar charter schools serving “general” or “special” education students. The analysis excludes virtual schools and brick-and-mortar schools focused on dropout prevention and recovery.

[7] Although composite estimates include value-added estimates for Integrated Mathematics I, Integrated Mathematics II, and ELA 1, there are very few observations for these exams and they have relatively little bearing on the outcomes. The 2019 estimates capture the value-added estimates for schools in operation during the 2018–19 school year and average those schools’ value-added estimates across 2016–17, 2017–18, and 2018–19. This is the “three-year” value-added estimate that Ohio makes publicly available.

[8] The composite value-added measures are scaled the same way for pre-pandemic years (2018–19) and post-pandemic years (2021–22 and 2022–23), which enables straightforward pre- and post-pandemic comparisons. Figure 1 reveals a decline of 0.023 of a standard deviation in ELA achievement growth (over a 50 percent drop), but there is no decline in charter schools’ effectiveness (relative to nearby district schools) in mathematics or science.

[9] The estimate reaches statistical significance at the p=0.05 level for a one-tailed test but not a two-tailed test, which is the stricter threshold used in this report. However, given that the purpose of this analysis is to determine whether charter schools continue to outperform nearby district schools, a one-tailed test is arguably the appropriate threshold to apply.

[10] These results appear in Table B4 of Appendix B. Note that the effect sizes for ELA and math are approximately twice as large as those reported in Figure 1, as the “effect sizes” on Ohio’s school report cards are scaled by the distribution of test-score growth, as opposed to the distribution of test scores. Scaling these estimates by the student achievement distribution reveals an overall charter school advantage of approximately 0.017 student-level standard deviations across all subjects combined, based on Ohio’s overall “composite” value-added measure.

[11] Hill et al. (2007) find that students typically experience annual achievement gains of 0.286 of a standard deviation in reading and 0.369 of a standard deviation in math in grades 4–10. Dividing the estimates in Figure 1 by these typical growth rates yields the fraction of a school year, which one can multiply by 180 (the typical number of instructional days in a school year) to get annual days’ worth of additional learning.

[12] The two-year composite estimates on Ohio’s school report cards pool these years such that 2022–23 estimates get twice as much weight as 2021–22 estimates. By contrast, the analyses in Figure 2 and Figure 3 weight 2021–22 and 2022–23 estimates equally. Putting more weight on 2022–23 increases estimated charter school effectiveness relative to district schools.

[13] In the interest of space, the figure omits results for exams that few students took (ELA 1, Integrated Mathematics I, and Integrated Mathematics II) but that are accounted for in the composite estimates.

[14] For example, students who took the ELA 2 high school exam in grade 10 during the 2018–19 school year posted scores that were 0.09 of a standard deviation greater than we would have predicted based on their performance as of grade 8. In this case, the (statistically insignificant) impact estimate of 0.09 for ELA 2 roughly translates to a value-added estimate of 0.045 standard deviations per year (learning in grades 9 and 10). This is comparable to the annual estimated ELA effect for grades 4–8 in 2018–19 of 0.033. In other words, the results in Figures 2 and 3 indicate that as of the 2018–19 school year, the achievement gains in ELA relative to district students were similarly large in grades 4–8 and high school, even though the estimates in Figure 3 make the high school estimates appear much larger.

[15] Although the by-year estimates yield primarily statistically insignificant results, the estimated differences between years sometimes attain or nearly attain conventional levels of statistical significance.

[16] It is not significant at the p<0.05 level for a two-tailed test, but it attains statistical significance for a one-tailed test.

[17] Within-district comparisons are particularly important in the wake of the pandemic, as recovery from learning loss varies significantly across districts. As Table B5 and Table B6 in the appendix reveal, making statewide comparisons suggests that the value-added of charter schools has improved significantly since 2019. However, that might be due to the intensive efforts to address learning loss in districts where charter students attend school.