There’s a large gap between the current state of education sector R & D and our aspirations for this research. As sectors, education and medicine have much in common, and analogies are often drawn between the two disciplines. However, when it comes to evidence-based practices, there are stark differences between the fields. For example, medicine has landmark clinical trials, for which there are few counterparts in educational research. In my last post, I made the claim that the current evidence base in education research is extremely thin at best and completely misleading at worst. I illustrated this point by following the evidence trail of an elementary reading program (Corrective Reading) rated as Strong on Ohio’s Evidence-Based Clearinghouse. If you closely examine the study, you’ll notice that the effects were minimal on important measures like state tests and for low-income and African-American students. Given this, it is hard for me to understand the program’s rating.

Education sector lacks evidence-based practices

One of the primary issues is that purported evidence-based practices in education are often presented as if they have a research base similar to that of the aforementioned landmark trials in medicine. That is a significant issue in and of itself. I followed this up with a second claim: by their very design, the studies that produce evidence-based practices discount externalities instead of solving for them. This quality improvement issue is shared with the medical community, but it is also critical to keep in mind that the landmark trials in medicine are considered such because of the quality and breadth of the research base backing them up. With the exception of the decades-long work on the science of teaching students how to read, funded by the National Institutes of Health, there are few equivalent studies in our field.

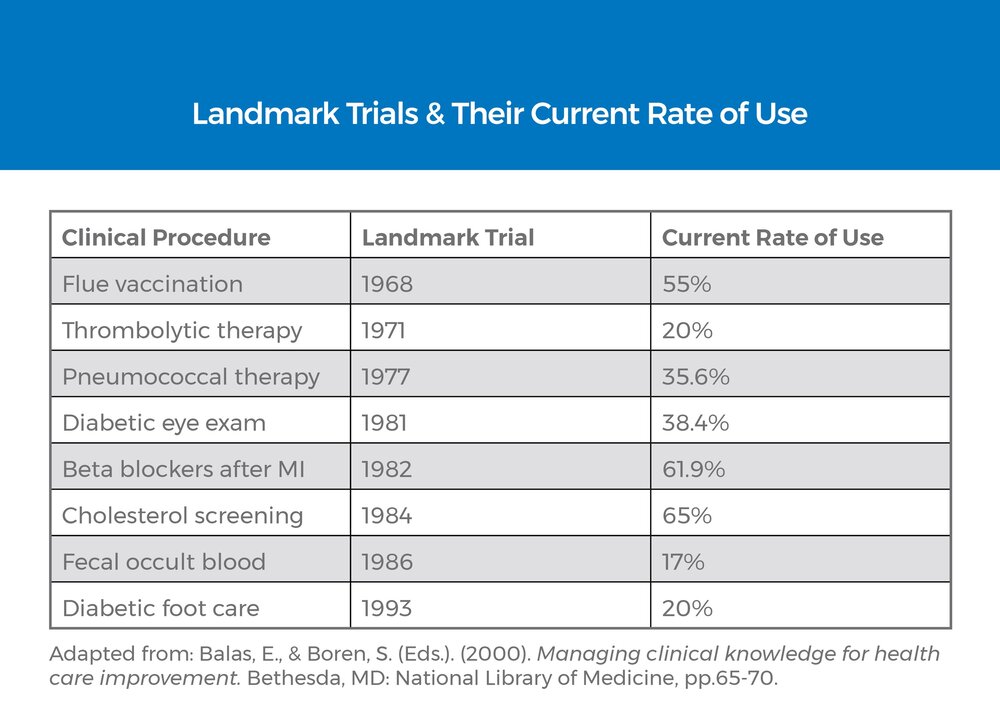

As the table above clearly displays, even when you have seminal studies pointing the way forward for practitioners, there are significant barriers to implementation.[1] In education, we don’t have canons of clinical knowledge underlying our practices, and for the kernels of promise that we do have, we face the same implementation issues that medical practitioners do. To illustrate this further, the following graph displays the first-year results from a large randomized field trial of another reading program, Reading Recovery.[2]

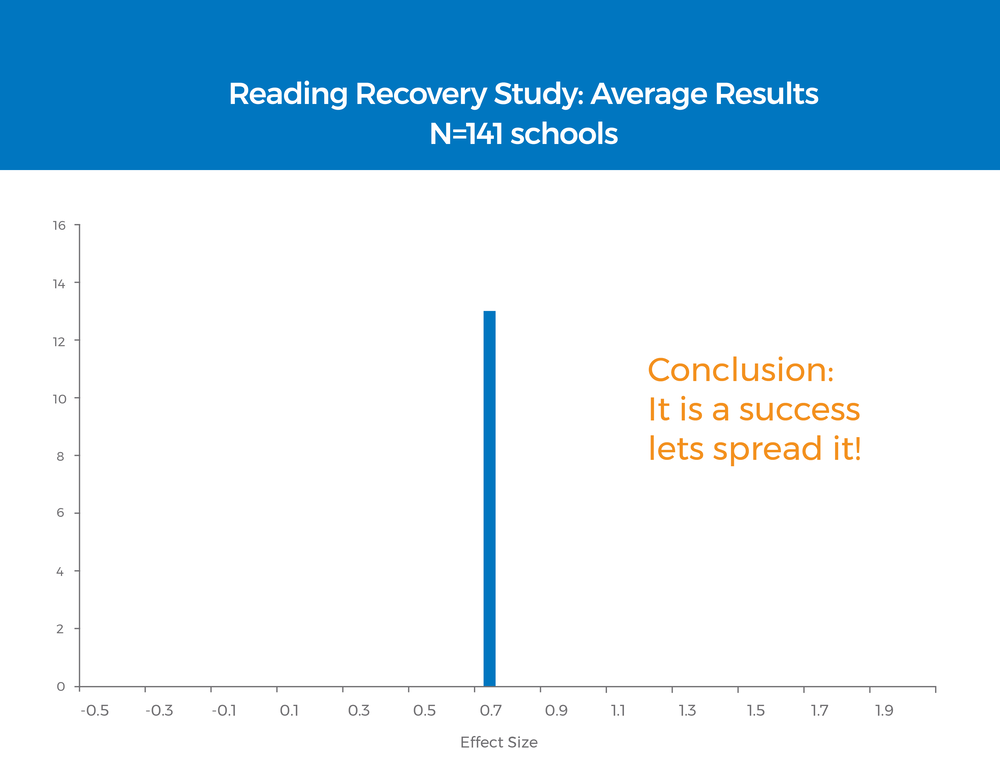

The average effects from the trial are strong. As this research was publicized, it would make sense then that schools with lots of struggling readers would flock to buy the program. In many districts it would be purchased and implemented across as many schools as possible to help those struggling readers. It’s exactly at this point that challenges will begin to emerge. The power of randomization is being able to isolate one factor’s effect, in this case the Reading Recovery program, while purposefully ignoring everything else. The problem is that in complex organizations like schools, the “everything else” is the very thing that causes wide variability in student outcomes. If you look at the multi-site study of Reading Recovery and zoom in on the effect sizes across those sites, this is exactly the picture that emerges.

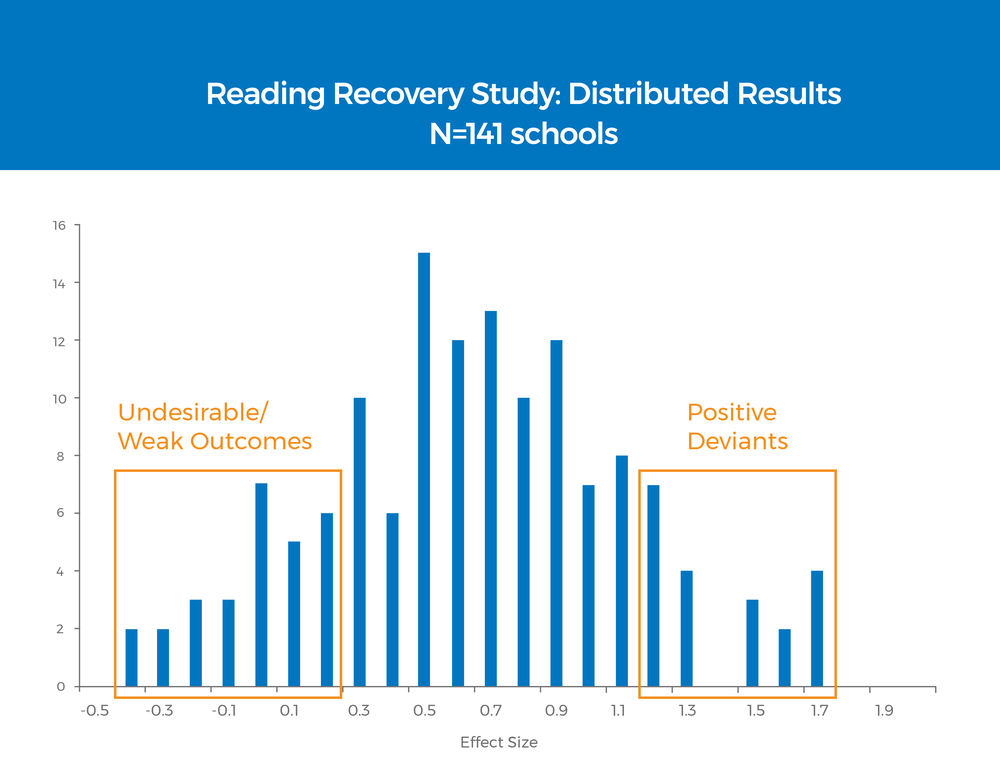

The magical average effect in the first graph now looks like a normal curve. In fact, twenty-eight of the schools had undesirable outcomes. Another twenty of the sites were positive deviants, far out-performing the norm. And then there were ninety-three sites somewhere in between. We would certainly learn a lot from studying the schools at each end of the curve, and as a school leader, that is exactly the study I would be interested in. Specifically, I’d want to know what was happening differently on the ground in those twenty positive deviants compared with the underperforming sites. Viewed in this way, I’d also be far more cautious before expending time, money, and human resources on purchasing and implementing Reading Recovery in my district. This is the illustration I want front and center in databases like Ohio’s Evidence-Based Clearinghouse.
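For readers who like to see the arithmetic, here is a minimal sketch of how a healthy-looking average can sit on top of very different site-level results. Everything in it is an assumption made for illustration: the ten effect sizes are made up and are not data from the Reading Recovery trial, and the function name `summarize_sites` and the `low` and `high` cutoffs are hypothetical choices, not the study’s definitions of an undesirable outcome or a positive deviant.

```python
from statistics import mean


def summarize_sites(site_effects, low=0.0, high=1.0):
    """Report the pooled mean alongside counts of sites in three bands.

    `low` and `high` are hypothetical cutoffs chosen for illustration only.
    """
    below = [e for e in site_effects if e < low]
    middle = [e for e in site_effects if low <= e <= high]
    above = [e for e in site_effects if e > high]
    return {
        "mean_effect": mean(site_effects),
        "n_below": len(below),
        "n_middle": len(middle),
        "n_above": len(above),
    }


# Made-up effect sizes for ten imaginary sites (NOT data from the trial).
hypothetical_effects = [-0.4, -0.1, 0.2, 0.3, 0.5, 0.5, 0.6, 0.8, 1.2, 1.4]

summary = summarize_sites(hypothetical_effects)
print(summary)
```

With these invented numbers, the average lands at roughly 0.5 even though two of the ten imaginary sites fall below zero and two sit above the upper cutoff. Reporting the counts next to the mean is one simple way a clearinghouse entry could show the spread rather than only the headline figure.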

A new vision for education R & D

Here again I think we can draw lessons from medicine. There are the R & D practitioners who develop evidence-based practices, like those in the landmark trials. Just as important are the quality improvement practitioners. They are tasked with figuring out how to get these evidence-based interventions to work in practice and/or ensure they are adopted in the field. The quality improvement specialists don’t ask “why aren’t you?” but rather “why can’t you?” In doing so, they partner with frontline workers to engineer the right environmental conditions and work processes needed to implement the evidence-based practices. Improvement scientists then generate the practice-based evidence using the best of what is available from the R & D sector as a starting point. This is the evidence that grows from practice and demonstrates that some process, tool, or modified staff role and relationship works effectively under a variety of conditions and that quality outcomes will reliably ensue.

These two types of evidence are complementary. Evidence-based practices answer the question “what works?” Practice-based evidence answers the questions “for whom?” and “under what conditions?” Both are key to achieving quality outcomes at scale. So, what teachers, principals, and superintendents really need is the “What Works, for Whom, and Under What Conditions Clearinghouse.” However, even with this adjustment, it is critical to acknowledge that the current R & D infrastructure for school improvement is at best weak and fragmented. When we create tools such as Ohio’s Evidence-Based Clearinghouse without transparency around their limitations, we may very well be doing more harm than good.

Perhaps it is here that we in education should learn another lesson from our medical counterparts—first, do no harm.

Editor’s note: This essay was first published by the School Performance Institute.

——

[1] Improvement as a Journey: Improvement Science Basics (2016). [PowerPoint Slides]. Retrieved during the 2016 Improvement Summit at the Carnegie Foundation for the Advancement of Teaching.

[2] Bryk, T. (2014). Learning to Improve: How America’s Social Institutions Can Get Better at Getting Better. [PowerPoint Slides]. Retrieved from www.carnegiefoundation.org.