Despite the continued controversy surrounding Common Core, the vast majority of states that originally adopted the standards have chosen to stick with them. But the same can’t be said of several new standards-aligned assessments.

Developed by two state consortia, the Partnership for Assessment of Readiness for College and Careers (PARCC) and the Smarter Balanced Assessment Consortium, these new tests offered member states shared ownership over common assessments, significant cost savings, and the ability to compare student performance across states. Despite this initial promise, however, membership in PARCC (and, to a lesser degree, Smarter Balanced) has been dwindling for some time now. But is this attrition due to the quality of the tests, as some claim?

To inform states about the quality and content of PARCC and Smarter Balanced, Fordham conducted the first comprehensive evaluation of three “next-generation” tests this past summer, recruiting reviewers who examined operational test items from PARCC, Smarter Balanced, and ACT Aspire. We also evaluated one highly regarded existing state test, the Massachusetts Comprehensive Assessment System (MCAS). Our team of rock star reviewers, comprising educators and experts on content and assessment, judged these tests against benchmarks based on the Council of Chief State School Officers’ (CCSSO’s) “Criteria for Procuring and Evaluating High-Quality Assessments.”

We wanted to know: Do the new tests focus on the most important content for college and career readiness? Do they require all students to demonstrate a range of skills, including higher-order skills called for by Common Core? What are each test’s respective strengths and weaknesses?

Our study examined English language arts (ELA)/literacy and mathematics tests for grades five and eight; a parallel study at the high school level was conducted by our colleagues at the Human Resources Research Organization (HumRRO).

So how did PARCC fare? We found:

- PARCC is a very good reflection of the most important content called for by Common Core in both ELA/literacy and mathematics. As we highlight in our report, for ELA, “the program demonstrates excellence in the assessment of close reading, vocabulary, writing to sources, and language.” For math, PARCC is “reasonably well aligned to the major work of each grade.”

- PARCC also matches the cognitive complexity of the Common Core standards and requires students to demonstrate a wide range of thinking skills (including higher-order skills) in both subjects—but particularly in ELA/literacy.

- PARCC tests include a wide variety of item types that are generally of very high quality; they represent a true departure from the low-quality multiple-choice tests of the past.

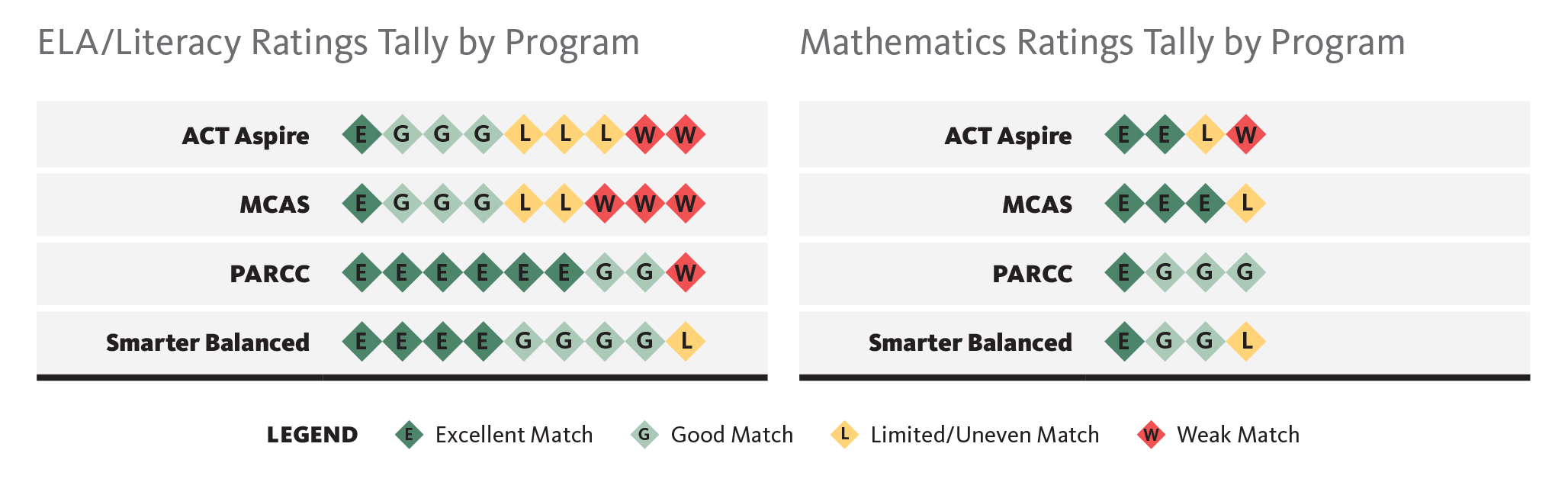

- Overall, of the four tests we reviewed, PARCC earned the highest number of “Excellent Match” ratings against the CCSSO criteria we evaluated (see ratings tallies below).

In short, it’s clear that better tests are here, and that PARCC is a high-quality option available to states.

Is it a perfect test? Of course not, and we offer several suggestions for improvement in the report. But as one of the reviewers on our eighth-grade ELA panels commented, “As a teacher being evaluated on whether I am teaching my students the standards, the PARCC assessment would clearly show measurement of my work.”

PARCC has also recently made several changes to its tests in response to member state feedback. Over the last year, it has shortened its tests, combined its original two testing windows into one, and plans to return results to states by July.

As the national testing landscape continues to fluctuate, it’s critical that students, parents, educators, and policy makers have accurate information about test content and quality. Contrary to claims of questionable quality, we found that PARCC meets Common Core’s high bar for rigor and quality. States that have dropped PARCC should demand similar independent evaluations of their own assessments.

As one of our lead reviewers commented, “We should never waste our valuable time in schools giving tests that are not meaningful and closely tied to the skills we practice in the classroom.”

Our full report can be found online here.